Adding docker support to a gradle plugin with testcontainers

Preface

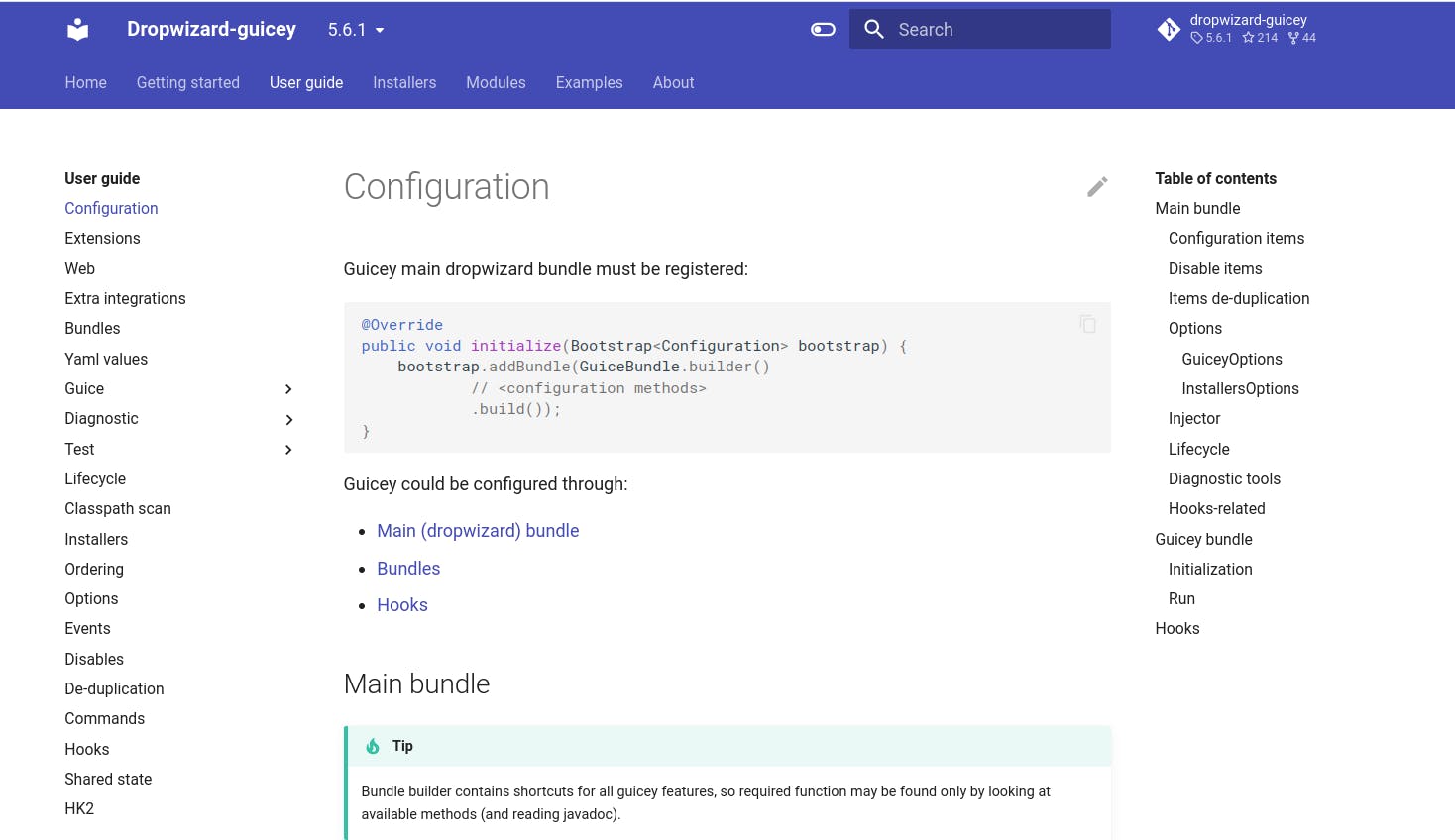

Several years ago I was searching for a simple (but pretty) documentation tool for my open source projects. Eventually, I settled on mkdocs with the material theme.

But it is a python tool, and I needed to use it from gradle. I liked the tool so much that I decided to build a gradle plugin for it. It seemed logical to implement basic python support in a separate plugin, in case I later decided to write a plugin for some other python module. That's how the two plugins were born.

(jumping ahead a bit: splitting the plugins was the right move, as the python plugin turned out to be even more popular than the specialized mkdocs plugin)

The basic idea was to use python the same way we use java: install it once manually and let the plugin automate dependency management. Python has a virtualenv module that creates isolated environments where project-specific modules can be installed without affecting the global python installation (much like global vs. local npm modules).

Eventually, many python-related problems surfaced: os-specific execution, differences between python versions (and even between the ways python was installed). But this article is not about them (maybe next time).

Once, I was asked whether it was possible to avoid a local python installation and use a docker container instead. At first I thought "no, no-no-no, no way" and wasn't going to do anything, but then, as usual, I got curious: "why not?" (besides, there are official python images).

Why testcontainers

Initially, I thought of using docker-java, but it is a low-level api with almost no documentation. To speed up the prototyping phase (I wasn't ready for a complete plugin rewrite, so I had to be sure that docker could be introduced in a relatively simple way), I used testcontainers instead (which is actually built on top of docker-java).

If you have never heard of it: testcontainers is a testing library (with junit, spock, etc. integrations) that makes container management in tests easy (starting a fresh database, redis or anything else). I've been using it for several years and it's a real life saver for integration tests. That's why I thought of it (and, by the way, I know about mkdocs precisely because testcontainers uses mkdocs for its documentation).

Testcontainers provides a really simple api for starting a container:

new GenericContainer(DockerImageName.parse("python:3.10.8-alpine3.15"))

.withCommand("python", "--version")

.withStartupTimeout(Duration.ofSeconds(1))

.start()

This code will download the python image (~40mb), run python --version and exit.

It quickly became clear that my needs were pretty much the same as in an integration test: start docker, do something and make sure the container is stopped afterwards. Docker-java is too generic (I would have to implement many things that testcontainers provides out of the box).

One important aspect is that testcontainers uses an additional specialized container (ryuk) that guarantees proper shutdown of the started containers. This might not be obvious, but stale containers are a pain, especially if they use port bindings: they would prevent subsequent runs and force users to investigate and clean up manually. In the case of gradle, crashes are possible. Moreover, tasks may run endless python processes (like the mkdocs dev server) which can only be stopped forcefully, and without proper container cleanup this would be a problem.

This also implies stateless container usage: you can't expect container state to be preserved between restarts. Not a big problem in my case, as I can simply store the environment inside the mapped directory (the same goes for command output). On the other hand, stateful containers would be a problem of their own: the state might get damaged, requiring additional user actions to flush it.

A small downside of using testcontainers is that you'll have to keep the junit dependency in the classpath: testcontainers is first and foremost a test tool and wasn't meant to be used outside of tests.

Container OS

After looking at the official python image page and seeing windows-based images (like 3.10.8-windowsservercore-1809), I was hoping to be able to run windows containers on linux (naive me). Of course, it's not possible.

In general, you can run linux containers on linux (and macos) and windows containers on windows. But docker on windows is installed with WSL2 support by default, so all linux containers work on windows too (of course, linux containers were working before WSL, but with WSL they work on all windows versions).

In order to use windows containers, you'll need windows Pro (Home does not support this!) and have to switch to "Use windows containers" in the docker tray icon menu. From that moment on, only windows containers would work.

On linux, windows containers would not work (in theory, it could work through windows virtualization (like windows does to run linux containers), but that would quickly run into windows licensing problems, so I'm not sure it will ever happen).

As you can see, you can target only linux containers and it would work everywhere! Windows containers are too exotic.

And, going back to testcontainers: it is limited to linux containers only, because ryuk (the special container used for proper shutdown) does not provide a windows version, so testcontainers is not able to run with windows containers (but it might someday).

From the gradle plugin's perspective, a single OS is a plus, because you don't have to support different commands (everything is obviously different for windows and linux). I added theoretical windows support to my plugin for the future, but I'm not sure it will ever be useful (I learned about the windows specifics too late).

CI

One testcontainers limitation needs to be mentioned: it currently does not work on windows CI servers like AppVeyor. The problem is that such servers are based on Windows Server, which is not supported by testcontainers.

The only known compatible windows CI is azure pipelines. But its free plan is very limited.

On linux CI there are no problems. For example, github actions does not even require any additional configuration.

File sharing

Another important aspect is file sharing. In the case of the gradle plugin, I simply mount the entire project folder into the container (and automatically rewrite the python command to use the matching paths):

new GenericContainer(DockerImageName.parse("python:3.10.8-alpine3.15"))

.withFileSystemBind("/local/path/project-name", "/usr/src/project-name", BindMode.READ_WRITE)

...

But there is a problem: the container runs as the root user and so creates all files as root. On windows and macos, docker volumes are implemented using a network mount, so files created inside the container (inside the volume) by the root user are correctly remapped to the current user.

On linux, files created inside the container keep root ownership, so you'll need sudo to do anything with them. For example, if your command, executed inside the container, writes anything into the project build directory, then gradlew clean would fail (due to insufficient permissions).

To work around this problem, I execute chown inside the container right after the python execution to correct the ownership of all created files. Note that the target user does not need to exist inside the container when chown is performed with a uid.

To avoid resolving the current user's uid, I used the uid (and gid) of the project root folder (which should certainly be good enough):

Path projectDir = project.rootProject.rootDir.toPath()

int uid = (int) Files.getAttribute(projectDir, "unix:uid", LinkOption.NOFOLLOW_LINKS)

int gid = (int) Files.getAttribute(projectDir, "unix:gid", LinkOption.NOFOLLOW_LINKS)

dockerExec(new String[]{"chown", "-Rh", uid+":"+gid, dir.toAbsolutePath()})
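The "unix" attribute view used above is not available on every file system (the default windows file system does not provide it), so the lookup is worth guarding. Below is a minimal sketch of such a guard; the OwnerResolver class and its method names are hypothetical and are not part of the plugin, and the resulting command would still have to be passed to something like the dockerExec call above.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.LinkOption;
import java.nio.file.Path;
import java.util.Optional;

// Hypothetical helper (not part of the plugin): resolves the owner of a host directory
final class OwnerResolver {

    private OwnerResolver() {
    }

    /** Returns "uid:gid" of the directory, or empty when the file system has no unix attributes. */
    static Optional<String> ownerSpec(Path dir) {
        // e.g. the default windows file system does not support the "unix" view
        if (!dir.getFileSystem().supportedFileAttributeViews().contains("unix")) {
            return Optional.empty();
        }
        try {
            int uid = (Integer) Files.getAttribute(dir, "unix:uid", LinkOption.NOFOLLOW_LINKS);
            int gid = (Integer) Files.getAttribute(dir, "unix:gid", LinkOption.NOFOLLOW_LINKS);
            return Optional.of(uid + ":" + gid);
        } catch (IOException ex) {
            throw new UncheckedIOException("Failed to read owner of " + dir, ex);
        }
    }

    /** Builds the chown command for execution inside the container, if ownership can be resolved. */
    static Optional<String[]> chownCommand(Path hostDir, String containerPath) {
        return ownerSpec(hostDir)
                .map(owner -> new String[]{"chown", "-Rh", owner, containerPath});
    }
}
```

When the result is empty, the chown step can simply be skipped: as described above, only linux hosts need the ownership fix.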

As the python plugin is generic, I had to add a public dockerChown(path) method directly to the python task, so that it can be used in a doLast hook:

task sample(type: PythonTask) {

command = '-c "with open(\'build/temp.txt\', \'w+\') as f: pass"'

doLast {

dockerChown 'build/temp.txt'

}

}

Also note that on windows, operations on mounted files are slower. This is a known WSL2 limitation. It is not a big problem when only a few files are used, but a serious one for hundreds or thousands of files. If you have windows Pro, you can try disabling WSL2 support in the settings and switching to pure Hyper-V, which should be much better.

Execution

There are two possible ways of command execution.

Container command

The command can be specified as the container command:

try {

GenericContainer container = new GenericContainer(...)

.withStartupTimeout(Duration.ofSeconds(1))

.withCommand("python", "--version")

.withLogConsumer(...)

.start()

} catch (Exception ex) {

// if the command fails, container startup fails too and an exception is thrown.

// Testcontainers would also log the error together with the entire output

}

In this case, the container executes the command immediately after start and shuts down once the command finishes.

Note that .start() does not wait for the command to finish, so you'll have to wait for the container to stop manually:

while (container.isRunning() && !Thread.currentThread().isInterrupted()) {

sleep(300)

}

(in the case of gradle, you can't let the task finish until the docker command has finished)
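The waiting loop above can be extracted into a small reusable helper. This is only a sketch: the ContainerWait class and the awaitStopped method are made-up names, and the polling interval is passed by the caller. It also restores the interrupt flag, which Thread.sleep clears when it throws InterruptedException.

```java
import java.util.function.BooleanSupplier;

// Hypothetical helper (not part of testcontainers or the plugin)
final class ContainerWait {

    private ContainerWait() {
    }

    /**
     * Polls until the process stops or the current thread is interrupted.
     *
     * @return true when the process stopped on its own, false on interruption
     */
    static boolean awaitStopped(BooleanSupplier isRunning, long pollMillis) {
        while (isRunning.getAsBoolean()) {
            if (Thread.currentThread().isInterrupted()) {
                return false;
            }
            try {
                Thread.sleep(pollMillis);
            } catch (InterruptedException ex) {
                // sleep() clears the interrupt flag: restore it for the caller
                Thread.currentThread().interrupt();
                return false;
            }
        }
        return true;
    }
}
```

With a testcontainers container it could be called as ContainerWait.awaitStopped(container::isRunning, 300); a false result would mean that the gradle task was interrupted and the container still has to be stopped.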

The downside is that we lose some time starting and stopping containers (~300ms). Another downside is losing container state: for example, the python plugin requires the virtualenv module to be installed in order to create a virtual environment, which means that both commands (installation and environment creation) must be executed in the same container (so, in my case, it was simply impossible to always use fresh containers).

The advantage of this approach is that we can receive command logs immediately (withLogConsumer). This is important for long-running (or endless) processes, like the mkdocs dev server, so I had to provide such an option for python tasks. In my experiments, it takes about 10 seconds for ryuk (the shutdown container) to properly remove all started containers after an emergency gradle stop (IDE stop or ctrl+d in the console).

Testcontainers has many options for detecting container readiness, but in my case the simplest timeout was enough (just wait and assume the container is running): withStartupTimeout(Duration.ofSeconds(1)).

It is also possible to get the exact exit code of the executed command, although in a roundabout way:

int exitCode = container

.getDockerClient()

.inspectContainerCmd(container.getContainerId())

.exec()

.getState()

.getExitCode();

In-container command

Another approach is to start the container with an endless command and execute commands inside it:

GenericContainer container = new GenericContainer(...)

.withCommand('tail', '-f', '/dev/null')

.withStartupTimeout(Duration.ofSeconds(1))

container.start()

try {

Container.ExecResult res = container.execInContainer(StandardCharsets.UTF_8,

new String[]{"python", "--version"})

} finally {

container.stop()

}

The downside is that the command output is available only after the command has finished:

res.getStdout().toString();

res.getStderr().toString();

In the python plugin, pip installation tasks usually run long enough for the absence of logs to be uncomfortable, but it is the only way.

In my case, in-container execution was the preferred way of running commands, as I have many short-lived python commands and the savings from not restarting the container for each command were significant.

It is important to note that the container (working dir, environment variables and port bindings) can be configured only at container startup:

new GenericContainer(...)

.withWorkingDirectory(workDir)

.withEnv("SOME_ENV", "value") // withEnv (unlike addEnv, which returns void) can be chained

.withExposedPorts(8080) // more on this below

For example, when you need a different working directory, you'll have to restart the container. In the case of the python plugin, each python task can declare its own directory, environment and ports, so, to support this properly, I had to compare the current container parameters with the requirements of the upcoming command and restart the container automatically when necessary.

This caused an unavoidable restart in the mkdocs plugin: python environment tasks use the root project dir as the working directory, but all mkdocs tasks use the mkdocs sources directory as home, so the container has to be restarted in any case (but a single restart is not a problem).
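The restart decision described above could be sketched as a comparison of two settings objects. The ContainerSettings class below is hypothetical (the real plugin may track its configuration differently), and it assumes that extra environment variables and extra ports in the running container do no harm to the next command.

```java
import java.util.Map;
import java.util.Objects;
import java.util.Set;

// Hypothetical value object (not part of the plugin): what a container was started with
final class ContainerSettings {

    final String workDir;
    final Map<String, String> env;
    final Set<Integer> ports;

    ContainerSettings(String workDir, Map<String, String> env, Set<Integer> ports) {
        this.workDir = workDir;
        this.env = Map.copyOf(env);
        this.ports = Set.copyOf(ports);
    }

    /**
     * True when a container started with these settings can also run a command
     * that needs the required settings, i.e. no restart is necessary.
     */
    boolean satisfies(ContainerSettings required) {
        // the working directory must match exactly
        return Objects.equals(workDir, required.workDir)
                // assumption: additional variables and ports are harmless
                && env.entrySet().containsAll(required.env.entrySet())
                && ports.containsAll(required.ports);
    }
}
```

Before each command, the wrapper would build the required settings and restart the container only when current.satisfies(required) returns false.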

Custom container

Most likely, you'll have to create your custom container class because:

- It is the only way to do static port bindings

- It is the only way to hide container errors in logs

class CustomContainer extends GenericContainer<CustomContainer> {

CustomContainer(String image) {

super(DockerImageName.parse(image))

}

}

Startup errors logging

If container startup fails, testcontainers will throw an exception and log it together with recorded output. This is very handy in the test environment, but not desired in the plugin because it would duplicate the plugin's own logs (besides, in case of python plugin, python command failure might not be a problem at all).

The only way to hide these logs is to override logger creation in custom container class:

@Override

protected Logger logger() {

// avoid direct logging of errors (prevent duplicates in log)

return NOPLoggerFactory.newInstance().getLogger(CustomContainer.name)

}

Note: without the --stacktrace option, gradle shows only the top-most exception message in the console (as the reason for the failure). Testcontainers exceptions are hierarchical: for example, Container error -> Container failed to start -> Port 8080 already in use. To make things clearer for the end user, it is better to collect all the messages in the hierarchy and put them into the top-most exception.

try {

container.start()

} catch (Exception ex) {

String msg = collectMessages(ex) // hypothetical helper: collects all messages through the hierarchy

throw new GradleException("Container startup failed: \n" + msg);

}
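Collecting the messages from the exception hierarchy might look like the sketch below. The ErrorMessages class is a made-up name, and the indentation of nested causes and the depth limit are arbitrary choices, not something gradle or testcontainers prescribes.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper (not part of the plugin)
final class ErrorMessages {

    private ErrorMessages() {
    }

    /** Joins the messages of an exception and all of its causes, outermost first. */
    static String collect(Throwable error) {
        List<String> messages = new ArrayList<>();
        Throwable current = error;
        // the depth limit guards against cyclic cause chains
        for (int depth = 0; current != null && depth < 20; depth++) {
            String msg = current.getMessage();
            // fall back to the exception type when there is no message
            String line = (msg == null || msg.isBlank())
                    ? current.getClass().getSimpleName() : msg;
            // skip exact repeats of the previous message
            if (messages.isEmpty() || !messages.get(messages.size() - 1).equals(line)) {
                messages.add(line);
            }
            current = current.getCause();
        }
        StringBuilder out = new StringBuilder();
        for (int i = 0; i < messages.size(); i++) {
            // indent each nested cause a bit deeper
            out.append("  ".repeat(i)).append(messages.get(i));
            if (i < messages.size() - 1) {
                out.append('\n');
            }
        }
        return out.toString();
    }
}
```

The result can then be used as the message of the GradleException, so that the root cause (like the busy port) is visible even without --stacktrace.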

Port bindings

By default, testcontainers uses random port numbers: when you expose a port with .withExposedPorts(8080), it is actually bound to a random free host port.

You can obtain the actual assigned port using container.getMappedPort(8080).

This is perfect for tests, but a gradle plugin requires fixed port bindings.

Testcontainers provides FixedHostPortGenericContainer to allow fixed mappings, but it is deprecated (because fixed ports are not recommended). If we look into its implementation, we'll see that GenericContainer already contains a protected addFixedExposedPort method, so we can simply add a public method to our custom container class and use it:

CustomContainer withFixedExposedPort(int hostPort, int containerPort){

super.addFixedExposedPort(hostPort, containerPort, InternetProtocol.TCP)

return self()

}

And now port bindings would look like:

new CustomContainer(...)

.withFixedExposedPort(8080, 8080) // container 8080 to host 8080

.withFixedExposedPort(9000, 9090) // container 9090 to host 9000

Putting it all together

For the python plugin, the requirements turned out to be:

- As the plugin executes a lot of python commands, one container should be used for all of them

- It must be possible to run a command in an "exclusive" container for long-running tasks (for live logs)

- Fixed port bindings are required (much simpler to use in a plugin than random ports)

- Containers are stateless: they must be destroyed after execution (some "caches" might be stored in the project directory, like virtualenv)

- The project directory is mapped into the container

- A fix for root-owned files is required

- Automatic path conversion is required (the plugin might be used both with a locally installed python and with docker). This also implies OS-specific corrections (linux containers could be started from windows).
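The path conversion requirement from the list above could be sketched as follows. The DockerPaths class is a hypothetical name, and the logic is a simplification of what a real plugin needs: it only handles paths inside the mounted project directory and normalizes separators for a linux container.

```java
import java.io.File;
import java.nio.file.Path;

// Hypothetical helper (not part of the plugin)
final class DockerPaths {

    private DockerPaths() {
    }

    /**
     * Rewrites a host path located under the project directory into the matching
     * container path. Paths outside the project are not mounted, so they are
     * returned unchanged.
     */
    static String toContainerPath(Path projectDir, String containerRoot, Path hostPath) {
        Path root = projectDir.toAbsolutePath().normalize();
        Path target = hostPath.toAbsolutePath().normalize();
        // startsWith compares whole name elements, so "project-name-2" does not match
        if (!target.startsWith(root)) {
            return hostPath.toString();
        }
        // relativize() yields OS-specific separators, but linux containers need '/'
        String relative = root.relativize(target).toString()
                .replace(File.separatorChar, '/');
        return relative.isEmpty() ? containerRoot : containerRoot + "/" + relative;
    }
}
```

For the mount used earlier, a host path /local/path/project-name/build/temp.txt would be rewritten to /usr/src/project-name/build/temp.txt before being passed to the python command.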

A simple wrapper could easily abstract all the docker-related stuff:

public class ContainerManager {

private String image;

private CustomContainer container;

public ContainerManager(String image) {

this.image = image;

}

public void start() {

if (container == null) {

(container = createContainer())

// container with infinite command

.withCommand('tail', '-f', '/dev/null')

.start();

}

}

public void stop() {

if (container != null) {

container.stop();

container = null;

}

}

public void exec(OutputStream out, String[] command) {

// note: command might need paths re-mapping (to convert native paths to docker paths)

Container.ExecResult res = container.execInContainer(StandardCharsets.UTF_8, command);

// delayed output

if (res.stdout != null ) out.write(res.stdout.getBytes(StandardCharsets.UTF_8))

if (res.stderr != null ) out.write(res.stderr.getBytes(StandardCharsets.UTF_8))

// check correctness

if (res.exitCode != 0) {

throw new IllegalStateException("Execution failed")

}

}

public void execExclusive(OutputStream out, String[] command) {

// simply starting new container exclusively for one command

CustomContainer cont = createContainer()

try {

cont.withCommand(command)

// immediate logs streaming

.withLogConsumer { OutputFrame frame -> out.write(frame.bytes == null ? new byte[0]: frame.bytes) }

.start()

// wait for execution to finish (the interruption check detects gradle task interruption)

while (cont.isRunning() && !Thread.currentThread().isInterrupted()) {

sleep(300)

}

} catch (Exception ex) {

// hide error

} finally {

cont.stop(); // for emergency ending (e.g. after ctrl + d)

}

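// if you need the exact exit code (otherwise the exception above always means an error).

// Note: the exclusive container (cont) must be inspected, not the shared one. In real code

// the exit code has to be read before cont.stop(), because stop() removes the container

int exitCode = cont.getDockerClient().inspectContainerCmd(cont.getContainerId())

.exec().getState().getExitCode();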

if (exitCode != 0) {

throw new IllegalStateException("Execution failed")

}

}

private CustomContainer createContainer() {

return new CustomContainer(image)

.withStartupTimeout(Duration.ofSeconds(1))

// just examples (variable declarations omitted)

.withFileSystemBind(projectRootPath, projectDockerPath, BindMode.READ_WRITE)

.withWorkingDirectory(projectDockerPath)

.withEnv("SOME_ENV", "12")

.withFixedExposedPort(8080, 8080)

}

}

Note that this is not a working solution, just a highlight of the main points (in a real implementation, you'll have to take synchronization into account and implement container configuration properly).

My intention was only to show the main paths and pitfalls of using testcontainers to implement docker execution relatively easily.

If you need to write a gradle plugin for a python module, you can use the python plugin directly and get docker support out of the box (see the mkdocs plugin implementation as a reference).

Or see the python plugin implementation for more details.